Development of AI and MT – NMT

In the early 1990s, due to the success of deep learning and neural networks in the field of artificial intelligence, machine translation introduced these new technologies, thus evolving statistical machine translation into neural machine translation (NMT). Neural machine translation is a translation technology based on deep learning, with the core principle of using neural networks to simulate the connections of neurons in the human brain to process natural language. NMT employs an encoder-decoder architecture to convert the source language text into a set of feature vectors, which are then translated into the target language text by the decoder. In this process, the seq2seq model and attention mechanism are key technologies, responsible for learning the mapping relationship from the source language to the target language and focusing on critical information in the source text during translation. The advantage of NMT is its ability to automatically learn the intrinsic rules of languages, handle complex linguistic phenomena, and provide higher translation accuracy and fluency. However, NMT requires large amounts of training data and computational resources and faces challenges in model interpretability.

Let's briefly review the development history of neural machine translation.

As early as 1982, artificial neural networks (ANNs) began to rapidly develop like mushrooms after the rain, bringing new hope. The main features of ANNs are distributed information storage and parallel information processing. Using connectionism, they have self-organizing and self-learning capabilities, offering new methods for processing information that traditional symbolic methods struggled with.

In 1987, the first International Conference on Neural Networks in the United States marked the birth of this new discipline.

Since the 1980s, investments in neural network research in Japan and European countries have gradually increased, further promoting neural network studies. The behaviorist school of artificial intelligence, represented by R. A. Brooks of MIT, proposed the concept of intelligence without representation and reasoning, asserting that intelligence can emerge from interactions with the environment, making representation and reasoning unnecessary. They suggested that developing adaptive "insect-like" machines was more realistic than creating idealized "robots." Using big data as the external environment helps advance this "intelligence without representation and reasoning."

The academic community in AI realized that traditional AI methods were limited to using successful experiential knowledge to handle problems, thus exploring combining symbolic mechanisms with neural network mechanisms and introducing agent systems.

The concept of Recurrent Neural Networks (RNN) dates back to 1982, when John Hopfield proposed the Hopfield network. However, the RNN as we know it was defined and proposed by Michael I. Jordan in 1986 with the Jordan network. In 1990, Jeffrey L. Elman simplified the Jordan network and trained it using the Backpropagation Through Time (BPTT) algorithm, forming the widely recognized RNN model.

Click here to explore the history of CNN development.

In 1997, Hoehreiter proposed a gated RNN called Long Short-Term Memory (LSTM). LSTM includes input gates, output gates, and forget gates, enabling it to model long-term dependencies in natural language, significantly improving RNN performance.

In 2016, Google developed the Google Neural Machine Translation (GNMT) system, based on deep learning, particularly using RNN and LSTM technologies. GNMT integrates neural network system technologies, utilizing advanced training techniques to achieve substantial improvements in machine translation quality. When translating, GNMT leverages large-scale real corpora for deep learning to automatically acquire language features and rules. Thus, GNMT is a big data-based neural machine translation system.

With ongoing technological advancements, many translation software now feature voice and photo translation, exemplifying the integration of natural language processing, speech recognition, CNN, and translation technologies. However, some challenges arise: 1. Why does photo translation still seem immature, with accuracy much lower than other translation methods? 2. Why does AI still lag behind humans in dealing with CAPTCHAs?

HU XIAOFENG

Now, let's analyze these challenges in detail one by one.

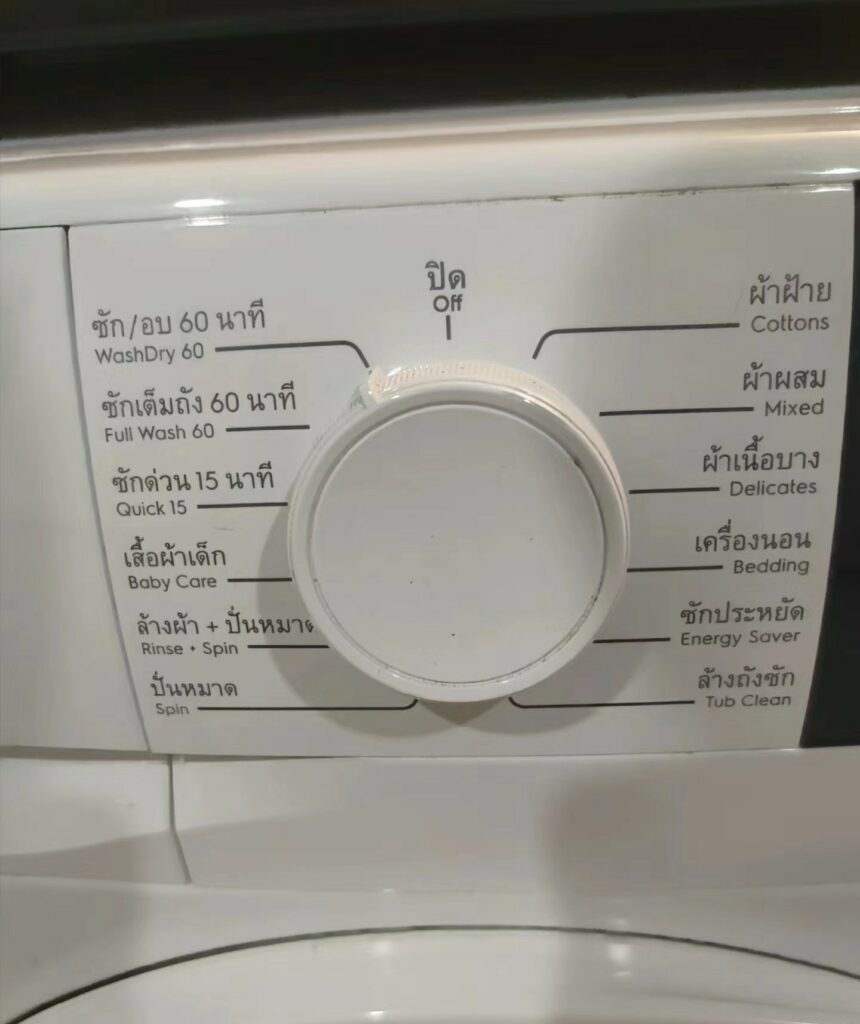

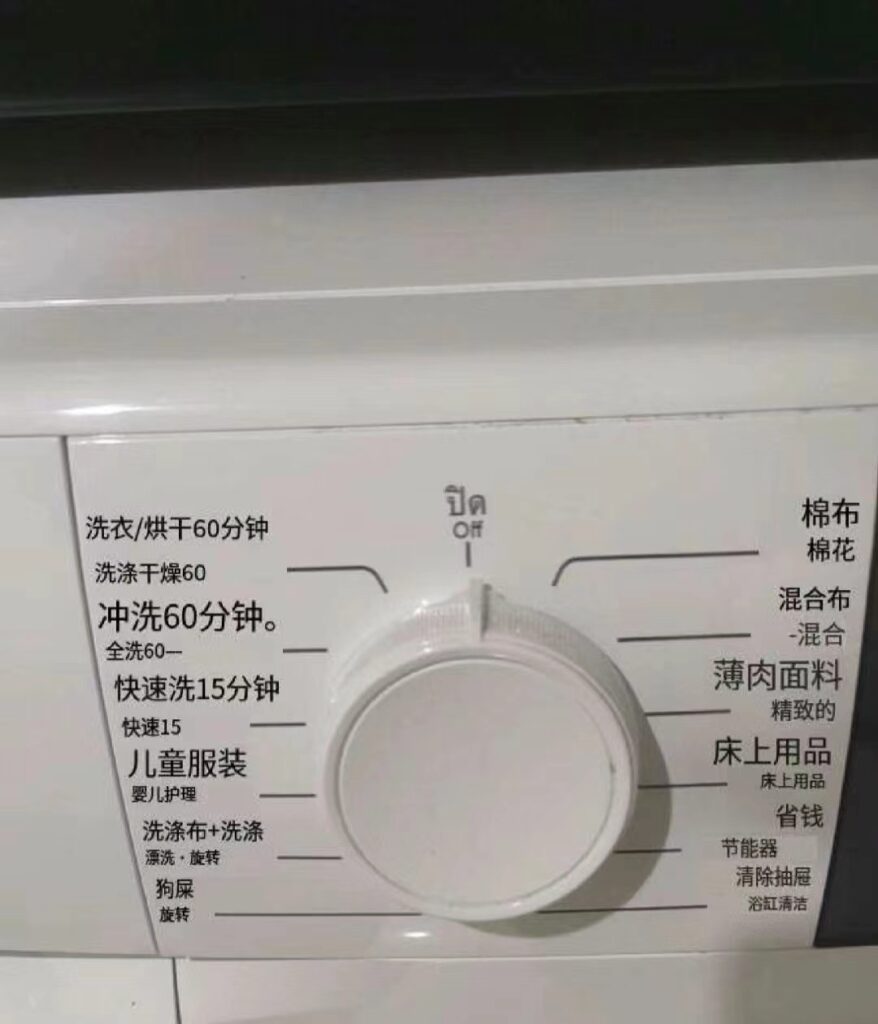

1. Why is photo translation still immature, with translation accuracy much lower than other methods?

Image source: Xiaohongshu user

Machine translation cannot compare to the human brain, which is an extremely precise instrument capable of achieving high accuracy in processing both speech and images, with excellent error correction. For machines, photo translation requires image recognition and text recognition before translation can occur. Errors often begin at the "recognition" step. The accuracy of photo translation tools can be affected by several factors, including but not limited to:

- Image Quality: Blurry, underexposed, or overexposed photos may prevent accurate text recognition, leading to incorrect translations.

- Text Angle and Orientation: Text that is not upright may result in recognition errors, affecting the translation outcome.

- Complex Backgrounds: Patterns or colors in the background can interfere with text recognition, reducing accuracy.

- Fonts and Sizes: Certain special or handwritten fonts may be difficult for recognition software to accurately read.

- Languages and Dialects: For non-standard or dialect texts, translation software may lack sufficient data support, leading to inaccuracies.

- Translation Algorithm Limitations: The software's algorithm may not handle all language structures and grammars perfectly, especially for low-resource languages.

- Context Understanding: Photo translation may lack context understanding, leading to translations that do not fit the context.

Despite these challenges, many photo translation tools continue to optimize their OCR (Optical Character Recognition) technology and translation algorithms to improve accuracy. For example, some applications claim high text recognition accuracy and offer instant translation and result editing features to enhance user experience. However, user feedback may still indicate accuracy issues in practical use.

Compared to typing text directly into a webpage or software for translation, I believe the limitations of photo translation largely depend on the recognition step. In other words, the development of CNN still needs improvement. (How can machine translation accurately translate text with line breaks? I think it requires recognizing all text first and then translating based on the entire text, similar to input text translation. What do you think, readers?)

From my personal experience and online observations of user feedback, photo translation often encounters errors with line breaks and short phrases. This indicates that despite the long history of CNN technology development and its integration with translation, current technology still has room for improvement, and photo translation quality needs significant enhancement.

2. When facing CAPTCHAs, why does AI still perform far worse than humans?

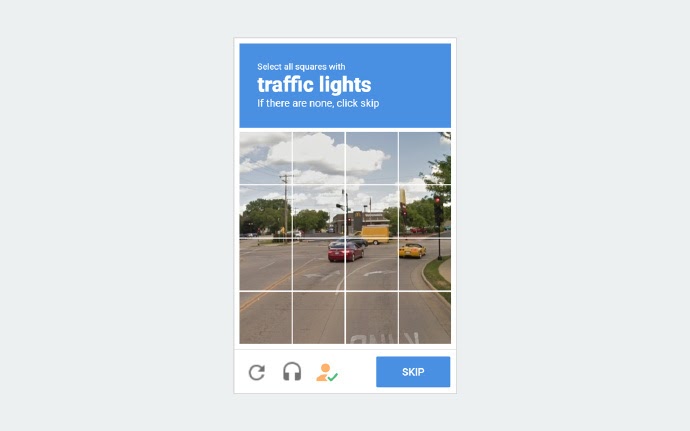

CAPTCHA stands for "Completely Automated Public Turing test to tell Computers and Humans Apart." It is a security mechanism designed to distinguish between human users and automated software (commonly known as "robots" or "bots"). CAPTCHA achieves this by requiring users to complete a simple test that is easy for humans but challenging for automated programs. Traditional CAPTCHAs often appear as image-based tests requiring users to identify distorted text or numbers or select specific objects from a series of images. Other types include audio CAPTCHA (for visually impaired users, requiring them to type the content of a played audio), logic CAPTCHA (solving simple math or logic problems), and interactive CAPTCHA (performing a simple mouse operation like dragging and dropping a puzzle). Recently, reCAPTCHA has been introduced as CAPTCHA defenses are frequently breached.

In simple terms, CAPTCHA is the familiar verification process like "Are you a robot?", "Sum of the numbers below", "Select images with bicycles", and "Slide verification." (The most annoying verification I encountered was from NAVER, which used logic questions like "What is the unit price of the item on this receipt?" combining language and intelligence tests. Of course, this is easy for native speakers.)

Common types of CAPTCHAs

Humans often find such CAPTCHAs relatively simple, but how does artificial intelligence perform when cracking CAPTCHAs?

In 2011, computer scientists at Stanford University cracked Audio CAPTCHA.

As early as 2013, AI startup Vicarious announced that they had cracked CAPTCHA using their AI technology. Four years later, the company published this method in the peer-reviewed journal Science. Vicarious co-founder Dileep George stated that their algorithm, when cracking CAPTCHA and reCAPTCHA, did not use a data-intensive approach. George explained that by using Recursive Cortical Networks (a type of deep learning image recognition model), they needed 5,000 times fewer images to train their algorithm compared to other methods, while still being able to recognize text in CAPTCHA systems, handwritten digits, and text in real-world environments.

In 2014, Google officially abandoned text-based CAPTCHA systems and replaced them with the "I'm not a robot" button. This AI-based system also included secondary tests, where users sometimes had to select images containing cats or other objects from a set. However, three researchers from Columbia University used deep learning techniques to automatically recognize Google's reCAPTCHA, achieving a success rate of 70%. The researchers also mentioned that using this method to crack Facebook's image CAPTCHA yielded an accuracy rate of 83.5%.

Advances in computer vision technology have enabled computers to recognize images, rendering CAPTCHAs ineffective and obsolete.

"The situation is either that CAPTCHAs have not been cracked, and we can distinguish between humans and computers, or CAPTCHAs have been cracked, and we have solved an AI problem."

(Obsolete) CAPTCHA website

Now let's look at GPT's performance:GPT-4 attempted to hire someone to pass a human verification test and even deceived them into working for it | Jiqizhixin (jiqizhixin.com).In comparison, GPT's approach seems rather opportunistic, but does this also suggest that AI finds it easier to have humans manually fill out CAPTCHAs rather than analyzing and cracking them step-by-step? Could this be another indication that AI still needs further development?

With old CAPTCHAs facing comprehensive attacks and cracking, current CAPTCHAs are more focused on user behavior operations, such as sliding CAPTCHAs, because the battle between CAPTCHA technology and cracking techniques can no longer rely solely on images.

Sliding CAPTCHAs mainly apply user behavior analysis, focusing on analyzing the behavior patterns of users completing the operation. Complementing image recognition technology, behavior analysis evaluates various behavior data during the operation—such as the speed of dragging the slider, the regularity of the trajectory, and the overall operation time—to determine whether the operator is a human user. The core of this technology is collecting and analyzing large amounts of data from normal user operations to construct an algorithm that can distinguish between human and machine users. These models and algorithms can identify human-specific behaviors that automated scripts or bots cannot mimic, thereby enhancing CAPTCHA security.

Currently, humans are continuously developing AI to perform tasks that humans consider relatively simple. On the other hand, they are constantly improving the technology to distinguish between humans and machines (it seems like a contest of human brains versus human brains).

Returning to the topic, why does AI struggle with sliding CAPTCHAs that apply user behavior analysis? Theoretically, behavior trajectories can also be cracked using deep learning, but this requires a large number of training samples and sufficient training time.

The author believes that AI databases should ideally obtain ample private information about the user's operation behavior in advance, which is undoubtedly strictly protected by websites, making training samples difficult to obtain. Additionally, sliding CAPTCHAs often have time constraints, and current AI technology is far from being able to simulate individual user behavior in a short time.