Development of AI and MT ---- EBMT

Example-Based Machine Translation: EBMT

Machine translation did not succumb to the second AI winter. In the 1980s, the AI field entered what is known as the "second AI winter": early AI research had failed to meet expectations, government and corporate investment in AI declined sharply, and research progress stagnated. The machine translation (MT) field, however, did not come to a halt. On the contrary, MT researchers quickly adjusted their strategic goals and explored new translation methods to tackle these challenges.

Japan, in particular, had a strong interest in machine translation. Its motivation was not the Cold War but a practical need: very few Japanese people spoke English at the time. This was a significant obstacle in the face of impending globalization, and it gave the Japanese strong motivation to find a workable method of machine translation. Rule-based translation from English to Japanese was exceedingly complex. The grammatical structure of Japanese is entirely different from that of English, so nearly all words have to be rearranged and new words added. In this context, Japanese computational linguist Makoto Nagao proposed the concept of Example-Based Machine Translation (EBMT), built on the idea of "using pre-prepared phrases to avoid repetitive translation."

Nagao first presented the idea at a conference in 1981, and it was published in 1984 as the paper "A Framework of a Mechanical Translation between Japanese and English by Analogy Principle," in the collection Artificial and Human Intelligence, edited by A. Elithorn and R. Banerji. This paper marked the formal introduction of the EBMT concept and detailed its core ideas.

The core idea of EBMT is to use existing translation examples for matching and analogy to generate new translations. This method is derived from the observation of human translation processes: when faced with complex or unfamiliar sentences, human translators often refer to and borrow from previously translated sentences or phrases. Nagao proposed that by constructing a large bilingual example library, a machine translation system could, when needing to translate a new sentence, retrieve and match similar translation examples from the library, use these examples for analogy and reorganization, and thus generate new translations.

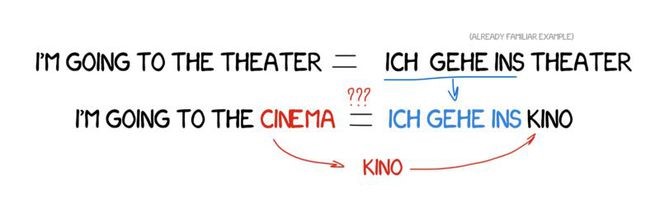

Imagine needing to translate a simple sentence like "I’m going to the cinema." If a similar sentence, "I’m going to the theater," has already been translated, and the word "cinema" can be found in a dictionary, the task is to identify the difference between the two sentences and translate the differing word without disrupting the sentence structure. The more examples available, the better the translation quality.

Using the same method, one could construct sentences in a completely unfamiliar language.
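The cinema example can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Nagao's actual procedure: the target language (German), the stored translation, the two-entry dictionary, and the function names `tokenize` and `translate_by_substitution` are all hypothetical choices made for the sketch.

```python
import re

def tokenize(sentence):
    # Split into words (keeping apostrophes) and separate punctuation marks.
    return re.findall(r"[\w']+|[^\w\s]", sentence)

def translate_by_substitution(new_src, example_src, example_tgt, dictionary):
    """Reuse one translated example that differs from new_src in exactly one word."""
    new_tokens = tokenize(new_src)
    ex_tokens = tokenize(example_src)
    if len(new_tokens) != len(ex_tokens):
        return None  # sentence structures differ; this sketch gives up
    # Collect the positions where the two source sentences disagree.
    diffs = [(old, new) for old, new in zip(ex_tokens, new_tokens) if old != new]
    if len(diffs) != 1:
        return None  # only the single-word-difference case is handled
    old_word, new_word = diffs[0]
    if old_word not in dictionary or new_word not in dictionary:
        return None  # the differing word cannot be looked up
    # Swap the translated word inside the stored translation.
    return example_tgt.replace(dictionary[old_word], dictionary[new_word], 1)

# Hypothetical data: one stored example and a tiny English-German dictionary.
dictionary = {"theater": "Theater", "cinema": "Kino"}
result = translate_by_substitution(
    "I'm going to the cinema.",
    "I'm going to the theater.",
    "Ich gehe ins Theater.",
    dictionary,
)
print(result)  # Ich gehe ins Kino.
```

The substitution works here because both German nouns take the same article form. With a noun of a different gender, the same swap would produce an ungrammatical sentence, which is one reason real systems need an adjustment step.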

Nagao's EBMT method mainly includes the following steps:

1. Establishing an Example Library:

The first step is to collect a large number of high-quality bilingual sentence pairs to form a comprehensive example library. This library needs to cover various types of sentences, structures, and contexts.

2. Similarity Matching:

When translating a new sentence, the system retrieves similar translation examples from the example library. Similarity matching can be based on multiple dimensions such as vocabulary, syntactic structure, and semantics.

3. Analogy Generation:

Using the retrieved similar examples, the system generates new sentence translations through analogy and reorganization. This process includes adjusting vocabulary and syntactic structures to ensure that the generated translation conforms to the target language's norms and habits.
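The three steps above can be sketched as a toy pipeline. This is a minimal illustration under several assumptions, not a reconstruction of Nagao's system: the example library `EXAMPLES`, the dictionary `LEXICON`, and the function names are hypothetical, similarity is measured only at the word level with Python's standard `difflib.SequenceMatcher`, and the generation step handles nothing more than one-word-for-one-word substitutions.

```python
import difflib

# Step 1: a (tiny, hypothetical) example library of English-German pairs.
EXAMPLES = [
    ("i am going to the theater", "ich gehe ins Theater"),
    ("she is reading a book", "sie liest ein Buch"),
]
# Hypothetical bilingual dictionary used to translate the differing words.
LEXICON = {"theater": "Theater", "cinema": "Kino", "book": "Buch", "magazine": "Magazin"}

def find_best_example(source, examples):
    """Step 2: return the example whose source side is most similar to `source`."""
    src_tokens = source.lower().split()

    def score(pair):
        # Word-level similarity ratio between 0.0 and 1.0.
        return difflib.SequenceMatcher(None, src_tokens, pair[0].split()).ratio()

    return max(examples, key=score)

def translate(source, examples, lexicon):
    """Step 3: adapt the best example's translation by word substitution."""
    ex_src, ex_tgt = find_best_example(source, examples)
    ex_tokens = ex_src.split()
    src_tokens = source.lower().split()
    matcher = difflib.SequenceMatcher(None, ex_tokens, src_tokens)
    result = ex_tgt
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        # This sketch only handles replacing exactly one word with one word.
        if tag != "replace" or (i2 - i1, j2 - j1) != (1, 1):
            return None
        old_word, new_word = ex_tokens[i1], src_tokens[j1]
        if old_word not in lexicon or new_word not in lexicon:
            return None
        result = result.replace(lexicon[old_word], lexicon[new_word], 1)
    return result

print(translate("I am going to the cinema", EXAMPLES, LEXICON))   # ich gehe ins Kino
print(translate("She is reading a magazine", EXAMPLES, LEXICON))  # sie liest ein Magazin
```

Returning `None` when no close example exists, or when the difference is more than a single word, keeps the sketch honest about what it cannot do. A fuller system would also match on syntactic structure and semantics and would adjust the surrounding grammar after substitution.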

The EBMT method has both advantages and limitations. Its main advantage is the efficient reuse of existing translation examples, which can address some challenges faced by rule-based and statistical methods. For irregular or complex sentences, example matching offers a more flexible solution. Compared to rule-based machine translation (RBMT), EBMT has lower development costs, since it requires a sufficiently large example library rather than extensive rule-writing.

However, EBMT's performance depends heavily on the size and quality of the example library, so it is less effective for low-resource languages. When no sufficiently similar example exists in the library, the system may fail to produce an accurate translation. In addition, reorganizing and adjusting sentence fragments during generation can introduce grammatical and semantic errors. Despite these limitations, EBMT offered an important new approach to the field of machine translation.

Makoto Nagao's EBMT idea has had a profound impact on the field of machine translation. By introducing the example library and the analogy method, EBMT broke through the limitations of rule-based methods: it does not depend on complex rule systems but generates translations through example matching and analogy. It opened new directions for machine translation research and promoted the development of hybrid translation methods, in which systems combine rule-based, statistical, and example-based techniques to draw on each approach's strengths. The EBMT concept has also inspired research in other areas, such as natural language processing, information retrieval, and question-answering systems.

Despite the "second AI winter" in the 1980s, machine translation researchers adjusted their strategic goals and pioneered new research directions. Makoto Nagao's 1984 proposal of Example-Based Machine Translation (EBMT) provided a novel method that reuses existing translation examples to translate more flexibly and efficiently. The concept proved influential both in machine translation and in the wider natural language processing field.

EBMT brought a ray of hope to scientists worldwide: it demonstrated that machine translation could be achieved by feeding existing translations into the machine without spending years establishing rules and exceptions. Although not a radical transformation, this method was clearly a significant step forward. Just five years later, the revolutionary invention of Statistical Machine Translation (SMT) emerged.